TL;DR: Existing remote laboratories typically have trust issues, and it’s holding them back. Proposal: adopt a zero-trust philosophy on the equipment side of the infrastructure (via UMA 2.0) to ease the growing pains of adding experiments built by other people, and so enable widespread adoption.

Existing remote laboratories typically have trust issues, and it’s holding them back. Holding them back from becoming mainstream educational tools like virtual learning environments, exam marking systems, collaborative discussion forums, electronic voting systems and so on.

I’m not talking about how to trust (authenticate) users, because that is a solved problem. But rather, how to trust (authenticate) experiments. So far, most people connect up all their experiments behind a firewall, so that internal communications between experiments and management software can be trusted without further ado. Your carefully-developed experiments are right there where you can trust them to co-exist peacefully with each other and the management servers.

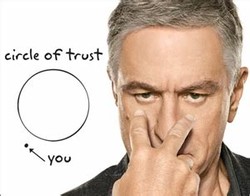

But you know just how badly things could go wrong if you let someone else put a bad experiment in there, and you are probably also worried about just how much work it would take to expand to a multi-site network where you can’t virtually add everyone to your protected sub-net. All of a sudden, you’ll end up acting like Jack Byrnes (Robert De Niro) in “Meet the Fockers” and your future collaborators will feel like this:

Before you know it, adding a new experiment to someone’s lab becomes as emotionally and logistically fraught as making an ill-judged attempt to pledge to a campus society. Whose rituals are now mostly banned, by the way.

Tim D.

Is there another way?

It’d be a whole lot better if someone could just get started by reading about it on the web, downloading some standard code to run on some standard hardware, and trying out being part of your lab, without you having to lift a finger. Sure, you might label them as a “community tier” experiment supplier, to distinguish them from your own experiments running in some super-expensive server room with air-con and backup power. But they get to play without any of the pledging nonsense.

Is this even possible? Sure it is. If the chocolate factory can go fully zero-trust then that proves the validity of the concept. It’s all about getting away from the castle-and-moat thinking.

Once the castle (sub-net) is full, you can’t grow anymore. So what do you do? Start over with a modern architecture that doesn’t trust (almost) anything; then you can grow to your heart’s content.

| | Limited-growth model | Mainstream model |
| --- | --- | --- |
| users | untrusted | untrusted |
| experiments | trusted | untrusted |
| management software | trusted | untrusted |

How?

I propose that the answer is to apply UMA 2.0 (User-Managed Access) principles to the experiments. The experiments have to validate themselves to the system in a way that protects both them and the system, whether they are hosted in an office or on an airplane, and whether they are owned by someone you know well or someone you’ve never even spoken to. Even most of the management software is not trusted: we explicitly permit each helper to do exactly the management tasks we need and no more. Then lots of complementary approaches can live alongside each other, without any danger of a bad actor running amok inside the moat.
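To make the “exactly the tasks we need and no more” idea concrete, here is a minimal sketch in Python. It is not the UMA 2.0 protocol itself, just an illustration of the deny-by-default, scoped-permission thinking behind it. Every name in it (the `GRANTS` table, the party names, the scope strings, `issue_token`, `is_permitted`) is made up for the example, and a real deployment would lean on a proper authorization server rather than a shared demo secret.

```python
import base64
import hashlib
import hmac
import json

# Hypothetical names throughout; this is NOT the UMA 2.0 wire protocol.
SECRET = b"demo-only-secret"  # assumption: a key held only by the authorization server

# Deny by default: a party gets only the scopes explicitly listed here.
GRANTS = {
    "community-pendulum-01": {"experiment:stream"},
    "booking-helper": {"booking:read", "booking:write"},
}


def issue_token(party, requested):
    """Issue a signed token carrying only the scopes this party was granted."""
    allowed = sorted(set(requested) & GRANTS.get(party, set()))
    payload = json.dumps({"sub": party, "scopes": allowed}).encode()
    sig = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return base64.urlsafe_b64encode(payload).decode() + "." + sig


def is_permitted(token, needed_scope):
    """Verify the signature first, then check the single scope the action needs."""
    try:
        body, sig = token.rsplit(".", 1)
        payload = base64.urlsafe_b64decode(body.encode())
    except ValueError:  # malformed token: no separator or bad base64
        return False
    expected = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig.encode(), expected.encode()):
        return False
    return needed_scope in json.loads(payload)["scopes"]


# A community experiment asks for more than it was granted...
token = issue_token("community-pendulum-01", {"experiment:stream", "booking:write"})
print(is_permitted(token, "experiment:stream"))  # the scope it was granted
print(is_permitted(token, "booking:write"))      # a scope it was never granted
```

The point of the sketch is that nothing depends on where the experiment sits on the network: a stranger’s experiment and your own go through the same check, and an unknown party simply ends up with an empty set of scopes.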

Conclusion:

Ironically, it’s a lot friendlier not to trust.